What is it about leaks that makes them different from other news events? If the statements of governments and media organizations are anything to go by, it’s file size that counts.[1] The UK Law Commission’s recent consultation document on the Protection of Official Data suggests that maximum sentences for unauthorized disclosure under the Official Secrets Act ought to be increased because “[i]n the digital age, the volume of information that can be disclosed without authorization is much greater” (United Kingdom Law Commission 2017).

Media organizations, too, have adopted this metric. When reporting on the Panama Papers began in 2016, the primary claim made by the news organizations involved was that it was big, very big. Süddeutsche Zeitung, the original recipient of the data from the Mossack Fonseca law firm, claimed that the 11.5 million documents were “more than the combined total of the WikiLeaks Cablegate, Offshore Leaks, Lux Leaks, and Swiss Leaks” (Obermeier et al. 2016) and produced an infographic comparing those respective disclosures on the basis of their gigabyte count. The Guardian confidently stated that the Panama Papers were “history’s biggest leak,” again suggesting that file size should be directly correlated with significance (Harding 2016).

In fact, file size as a metric tells you next to nothing about the volume of the information actually disclosed to journalists, never mind the popular resonance or political valency of particular revelations.[2] It also places a great deal of emphasis on the role of traditional gatekeepers of information. Or, to put it another way, as a way of understanding leaks, it misses almost everything that is important.

What primarily distinguishes leaks from the other unofficial disclosures of information that are the journalist’s stock in trade is not the amount of information disclosed to journalists, but the amount of original source material made accessible to the public. This public input changes the dynamics of how news is produced and how narratives are formed, bringing a multiplicity of voices into areas of decision-making that were formerly reserved for insiders. In the absence of a clear understanding of how leaks land in particular instances and what factors inform their reception, some have been tempted to see the dispersion of interpretative power as a problem: this, too, is mistaken.

Adam Fish and Luca Follis’s essay on the “temporality” of leaks points toward a promising way of thinking about large-scale document disclosures. It is clearly the case that the major disclosures of the past seven years have had a long political half-life. This persistent, “slow” quality (the ability to inform political debate long after most news stories have been forgotten) is a key characteristic of leaks and one of the major reasons why they have become a significant political phenomenon.

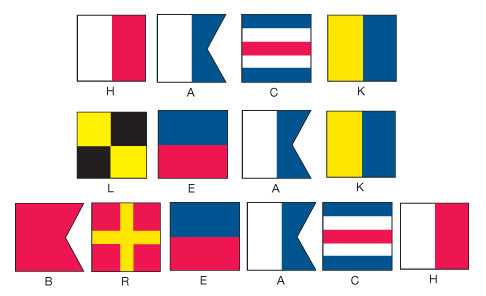

The consequences of Chelsea Manning’s whistleblowing are pre-eminent and inescapable in any serious treatment of this topic. The U.S. State Department cables that WikiLeaks began to publish in November 2010 remain a standard reference point for anyone writing about international affairs nearly seven years later, while the Afghanistan and Iraq War logs continue to be the subject of academic analysis in fields as diverse as epidemiology, statistics, geography, and war studies.[3]

Manning’s disclosures remain unsurpassed in their global impact, but less comprehensive document collections have also had a longer shelf life than might be expected. In early 2011, hacktivists liberated a cache of emails from U.S. security consultancy HBGary. This brought to the surface at least one news story of major significance (that Bank of America had commissioned a group of private companies to disrupt WikiLeaks and its support base) and also formed the basis of Project PM, a crowdsourced investigation into the organizational ecology of security contractors in the United States that was only disrupted by the arrest of its founder and moving spirit in 2012.

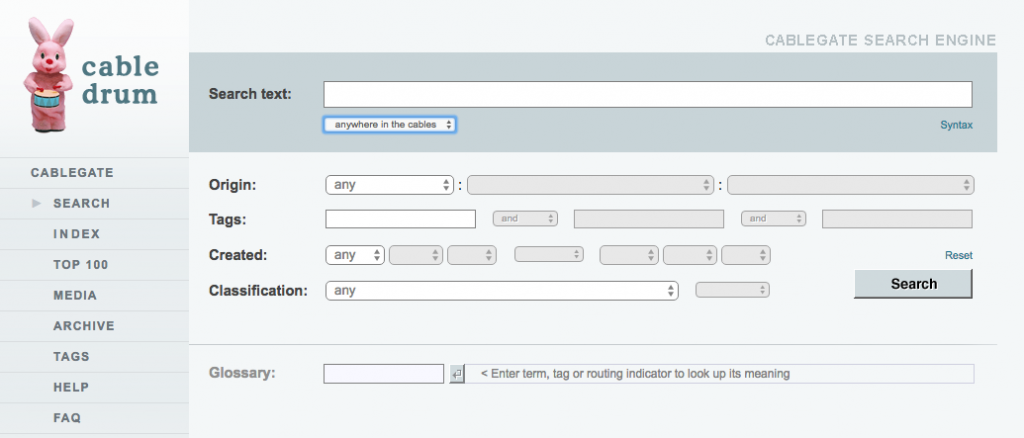

What these examples have in common is that original source documents were made available to the general public in the form of a searchable database and that this was an integral part of the publication strategy, not an afterthought. Particular groups of documents lend themselves more easily to being organized in a database than others (the State Department cables, prepared in a consistent format with unique identifiers and metadata tags designed to be machine readable, are again a key example), but searchable archives are an important reason why some document caches are able to persist beyond short-term news values.

Fish and Follis recognize that publication strategy influences how leaks are received, but no disclosure happens in a vacuum. They attempt to distinguish between “fast” and “slow” leaks on the basis of purposeful editorial decisions (How much information is being released? Is it tied to a particular news event?), but their analysis fails to take sufficient account of the context in which publication decisions make a difference. As a result, they fall into the trap of comparing information environments, such as election campaigns and court cases, rather than the role leaks play within them. A separate problem is that their characterization of publication strategies doesn’t quite fit what actually happened. This, for example, is what they say about Edward Snowden’s “slow” disclosures, which they contrast with the Democratic National Committee (DNC) and Podesta emails:

Although the[y] sought to influence then-current events, the full impact of the [Snowden] disclosures is oriented toward the longue durée and the extensive digital archive of American global panopticism that will be preserved in posterity. (Follis and Fish 2017)

In fact, a major omission in the Snowden publication strategy was precisely that there was no provision made for producing a searchable archive to ensure that once documents were put into the public domain, they remained accessible after individual news stories had been and gone. Compounding the problem was that extracts from the Snowden archive were not published in a consistent way that allowed readers to easily connect insights to individual documents, a situation exacerbated by a degree of inconsistency and repetition within the archive itself.[4]

In other words, news values dominated entirely over the interests of researchers, or even those with a professional interest in, say, mitigating the impact of National Security Agency (NSA) operations against the Tor network or commercial infrastructure. Individual stories were timed for maximum political—and sometimes disruptive—impact. To take two examples from June 2013, the publication of Presidential Policy Directive 20 made public America’s offensive cyber warfare ambitions on the eve of a summit with China (Greenwald and MacAskill 2013). The revelation of NSA operations against universities and other institutions in Hong Kong bolstered Edward Snowden’s personal position at a time when his extradition from the territory was still a possibility, bringing out protesters in his defense (Lam 2013). Despite this, no provision was made for collating stories in one place, still less producing an archive of source documents. The two full-text search engines that do exist were created by third parties independent of the publication process on the basis of open-source research.

If the Snowden revelations have had a longstanding impact, it was due to the momentous and specialized nature of their content, rather than a publication strategy intended to maximize the ability for nonspecialists to generate insights into the documents after the news cycle had moved on.

Although the presentation of documents has improved markedly since the first Snowden revelations were published in mid-2013, the experience shows a continuing need for agreed publication standards for contentious document sets.[5]

In contrast to the Snowden revelations, the DNC and Podesta emails, whose publication began on July 22 and October 7, 2016, respectively, and continued in stages, were searchable from the outset. Fish and Follis characterize these as “fast” releases that defied comprehension; what this misses is the crucial importance of the context into which they were released.

The Podesta emails in particular were published in the middle of an unusually acrimonious and negative election campaign. Elections form a distinctive kind of information environment: fast-moving and elaborately choreographed, with a disproportionate emphasis on gaining short-term advantage. One of the few points of agreement in the voluminous political science literature on election campaigns is that the impact of “shocks” and individual campaign events tends to decay quickly (Jacobsen 2015).

It is precisely this short-termism that makes what election consultant Lynton Crosby is reported to have called the “dead cat on the table” strategy viable: campaign timetables move so quickly that it is rarely necessary to “win” an argument on a factual basis to seize attention from your opponent. In fact, putting together a coherent argument is an inefficient strategy when a calculatedly lurid non sequitur will serve just as well.[6]

Assessment of the ultimate impact of the DNC and Podesta emails on the U.S. presidential election will have to be left to subsequent researchers, but the episodic nature of their release, their capacity to be searched for new insights, and their resonance with already latent concerns about the Clinton candidacy meant they were not “fast” in the context of the election campaign, as Fish and Follis would have it. In contrast with the various leaked stories that were published by the New York Times and Washington Post, the DNC and Podesta leaks were, in fact, subversively slow.

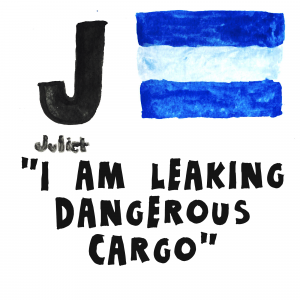

In fact, compared with stories appearing in a similar context, leaks are generally “slow,” and the greater the opportunities for public engagement, the slower they are likely to be. Precisely because they have a long half-life and can be interrogated by nonspecialists, leaks accompanied by publicly accessible archives also diminish the role of traditional gatekeepers. For Fish and Follis, the point of deeming the DNC and Podesta releases “fast” is to convey the sense that information entered the public domain with such rapidity that it effectively defied rational analysis, leading to the proliferation of conspiracy theories.

Molly Sauter, in another essay in this issue, makes a related argument using the same case study, specifically that leaks of emails by outside parties are liable to become fruitful ground for conspiracy “without the initial interpretive intervention of mainstream journalistic entities.”

Sauter argues that although mainstream journalists did not ignore the Podesta emails, their episodic release “extended the drama of revelation and surprise” wherein “journalists lost their interpretative authority.” This is deemed problematic because individuals without journalistic expertise and those with existing ideological standpoints are liable to mistake the “illicit aura” of exposed intragroup communications for the public interest. In distinguishing between leaks that emerge from within organizations and others, Sauter implies that this misapprehension about what constitutes the public interest applies as much to the sources of stories as it does to their readers.

There are a number of objections to make to these lines of argument. First and foremost, pizza-themed conspiracy theories were clearly neither the dominant nor the most politically relevant narratives to emerge from either set of emails, which were covered extensively by major media organizations. The initial publication of the DNC emails came on July 22, 2016, and the Podesta emails on October 7. Within 24 hours of each, mainstream outlets had identified the DNC’s conduct during the primary campaign and Hillary Clinton’s paid speeches, respectively, as the most significant content of the releases (Chozick et al. 2016; Shear and Rosenberg 2016). Controversies about the publication of the document sets ran alongside these reports, but it is simply wrong to assert that the substantive content of the releases was ignored.

Neither is it the case that the 2016 email releases failed to inform substantive analyses beyond the immediate context of the election itself (Sifry 2017). Observers have noted that the New York Times, if offered the DNC material, would likely also have chosen to publish the material in some form (Goldsmith 2017). Controversies about the ultimate sourcing of the material and partisan concerns aside, the assertion that there was no public interest justification for the releases seems misplaced.

So far, so typically leaky. The second, more interesting line of argument is that readers are dependent on journalists to properly interpret the content of disclosures. While the information in some archives—the Snowden documents, for instance, or some of the financial disclosures coordinated by the International Consortium of Investigative Journalists—may present technical obstacles for nonspecialist readers, the gist of the Fish and Follis and Sauter articles is that readers are liable to misinterpret documents produced in ordinary professional contexts, either through an inability to parse large quantities of information or a misapprehension about what is newsworthy.

What these arguments miss is that most of the major contemporary leaks have seen the professional and nonprofessional spheres working in tandem. The accessibility of source material to the public, combined with the common presence of interested parties (journalists, subject experts, readers) on social media, has produced a powerful dynamic of parallel scrutiny wherein the two spheres inform, criticize, and check the excesses of each other. This is a change of real significance: the formation of mainstream narratives is no longer the closed process it used to be when readers’ main route for response was the newspaper Letters to the Editor pages.

To return to Chelsea Manning’s disclosures for a moment, it is easy to forget how reporting on the State Department cables proceeded. The initial wave of mediated reporting via WikiLeaks’s major media partners El País, Le Monde, Der Spiegel, The Guardian, and the New York Times began on November 28, 2010. Alongside substantial stories, such as Saudi lobbying of the United States to take a hard line toward Iran and U.S. pressure on other countries not to pursue extraordinary rendition cases through their domestic courts, Cablegate’s first 24 hours included a host of stories about U.S. diplomats’ less-than-flattering descriptions of world leaders (Chen 2010).

TuniLeaks run by Nawaat

Based on that initial wave of reporting, opinion pieces were written predicting that the political impact of the cables’ release would be limited, aside from inadvertently reinforcing the status quo. Only a week later was this comprehensively refuted, when cables about Tunisia, distributed by the Lebanese paper Al Akhbar and the TuniLeaks website set up by nawaat.org, became a rallying point for local activists, helping to spark the Arab Spring and the global wave of democratic revolts that followed.[7]

This almost unprecedented popular energy was reflected back in crowdsourced activity around the searchable cable archive, which both collated and criticized the output of major media on blogs like WLPress and WikiLeaks Central, and sought to locate, discuss, and publicize unreported stories under the hashtag #wlfind. One of the major stories to come out of the archive was actually located this way, by independent journalist Kevin Gosztola. His discovery of a previously secret report on U.S. war crimes in Iraq, written by the United Nations Special Rapporteur on Extrajudicial, Summary or Arbitrary Executions, was duly picked up by others and led directly to the Maliki government refusing to renew U.S. troops’ immunity from prosecution.[8]

The checking function works in the other direction too. In August 2012, crowdsourcing on another WikiLeaks release, the Global Intelligence (GI) Files, a collection of emails drawn from the hack of private intelligence firm Stratfor, identified a surveillance system called Trapwire that had been purchased by several U.S. public authorities. A great deal of momentum built up online about Trapwire, which had not featured in any of the professional reporting on the GI Files. Speculation about Trapwire’s capabilities was combined with a growing frustration that mainstream journalists were not picking up the story. Intense lobbying of reporters on social media went on for several days.

This interaction paid dividends, although perhaps not quite in the way the crowd tweeting about #trapwire envisaged. The checking function provided by those who had seen a few overblown Homeland Security sales pitches before resulted in a story about petty corruption and cronyism in the security industry (Shachtman 2012), rather than one about advanced secret surveillance capabilities (that story was to emerge 10 months later).

The Trapwire episode offers a direct response to Molly Sauter’s concerns about “unmediated” leaks: interpretation is a two-way street. The initial crowdsourced reaction to the raw information in the GI Files may have been mistaken but, without the pressure of the crowd, what turned out to be a rather revealing story about how the industry works would have been missed entirely. Had the professional reporting community not acknowledged the newsworthiness of issues identified by their nonprofessional counterparts—despite initial resistance—the two communities could well have become profoundly alienated from each other. There are probably lessons to be learned here.

At a rather fundamental level, both the Fish and Follis and Sauter accounts of the Podesta emails are skewed by a profound unease about the results of the 2016 U.S. presidential election and speculation that Russian state actors may have had a hand in their sourcing. Should we be having second thoughts about the wisdom of anonymous leaks? Has there been a fundamental change that makes it naive to be drawing parallels with the halcyon days of 2010–2012, when leaks felt like a more straightforwardly emancipatory proposition?

Moments of high drama have a way of drawing concerns and reservations from those who are otherwise sympathetic to the case for disclosure. Long-term critic of government secrecy Steven Aftergood explained his reservations about WikiLeaks just as the Manning disclosures were beginning back in 2010. Three years later, NSA whistleblower Bill Binney’s initial reaction to Edward Snowden’s revelations was also qualified, if generally supportive.[9]

The DNC and Podesta email disclosures are not outlier events that bear no relation to the leaks that came before them, and it’s important not to lose sight of those continuities. Parallel scrutiny, too, has not disappeared: as I write this article, lively debate is ongoing about the content and presentation of WikiLeaks’s #Vault7 release of CIA malware. Nevertheless, I do share some concerns about the environment for leaks in 2017.

A limitation of parallel scrutiny is that it requires some kind of common forum to operate properly. In that regard, the development of self-contained “distinctive and insular” media ecosystems that limit the opportunity for encountering a broad range of dissenting views is potentially problematic (Benkler et al. 2017). In addition, researchers have found respondents with high degrees of political knowledge and low levels of trust in established institutions to be especially prone to the kinds of motivated reasoning that are often labeled as conspiracy theories. The concerns that preoccupy Fish and Follis and Sauter are closely related to these dynamics (Miller et al. 2016; Nyhan 2017; Swift 2016).

Neither an appeal to authority, as Sauter suggests, nor Fish and Follis’s recommendation of publication strategies that align “time and scale” will be sufficient to resolve this situation. A central difficulty is that although some conspiracy theories might appear irrational, it does not follow that a diminished degree of trust in established institutions is also irrational. The emergence of insular and alienated information communities reflects an endemic political problem that is not restricted to the public sphere.

Leaks have become politically important because, at a time when trust in institutions is collapsing across the board, they represent a rare instance of elite power being dissipated in a way that has genuinely broadened participation and brought with it surprisingly large social benefits. Without the parallel scrutiny of journalists, experts, readers, and researchers, Cablegate would not have been the phenomenon it was: journalists alone would not have been able to generate anything like the same world-changing, emancipatory impact. The practice of journalism has changed for the better as a result (Benkler 2013).

It seems strange to have to assert that increasing access to knowledge is more likely to benefit society than not, but that appears to be the state of the debate in 2017. The shocks of the Trump and Brexit votes have produced an understandable hunger for explanation, accompanied by a crisis of intellectual confidence.

Journalistic practice is undergoing a period of radical upheaval in the digital age and leaks are a major part of the process whereby the formation of narratives has been opened up to wider scrutiny. Aspects of 2016’s agenda will inevitably give pause to those who closely followed the contours of Cablegate, but scholars of these trends must take care not to confuse cause and effect. The development of isolated information communities has not been caused by leaks, but it has made clearer some of the social and political problems that have been coming to a head since 2008. Not least of these is a widespread sense of institutional failure and corresponding alienation from conventional political narratives.

Leaks, particularly when accompanied by public access to source material, have provided some of the few instances where that divide has been successfully negotiated. Those who misidentify leaks as the problem therefore run the risk of embracing deeply anti-democratic norms. Without a reality check, this could become self-perpetuating.