Introduction: Daily bread: Throughout recorded history, food has been one of the most enduring limitations shaping the template and tenor of humankind’s daily life. Finding it, securing it, and ensuring its future potential so dominate the history of civilization that they have come to define it. “The countryside lived off its harvest and cities off the surplus,” Braudel writes, and so the geography of civilization’s expanse was marked by the security of sustenance close at hand, guided from its earliest days by codes outlining the laws of consumption, and ready to receive the technological marvels that would shape the form of food in the centuries to come. While the full reach of this history would stretch to monumental proportions, the modern moment can be narrated, in part, as a new era of consumption. Tumultuous change unearthed the foundations of agriculture in search of new stores of plenty at the same moment that voyages set out in search of the expansive diversity waiting to be found in exotic ports of call. The edible productions of the nineteenth and twentieth centuries inherit this legacy, with phenomenal increases not only in quantity and quality, but in the very structures erected to govern movement, exchange, and consumption. These changes were not merely technological, altering the material nature of food through the mechanics of production; they also brought new forms of information that reshaped how food was understood. While Braudel suggests that Europeans had possessed little of the culinary sophistication found in the great civilizations of the world, the twentieth century coincides with their seeming domination of its culture. Ideas of exchange, production, and consumption splashed out of the bubbling cauldrons of cosmopolitan colonization and inadvertent industrialization: a recipe for the material and mental infrastructure of food in the centuries to come.

The timeline that follows charts the history of this infrastructure from the early moments of modernity, within the particularly limited context of the West, to explore the themes raised in this issue while supplementing them (when appropriate) with observations from the broader histories of food and nutrition.

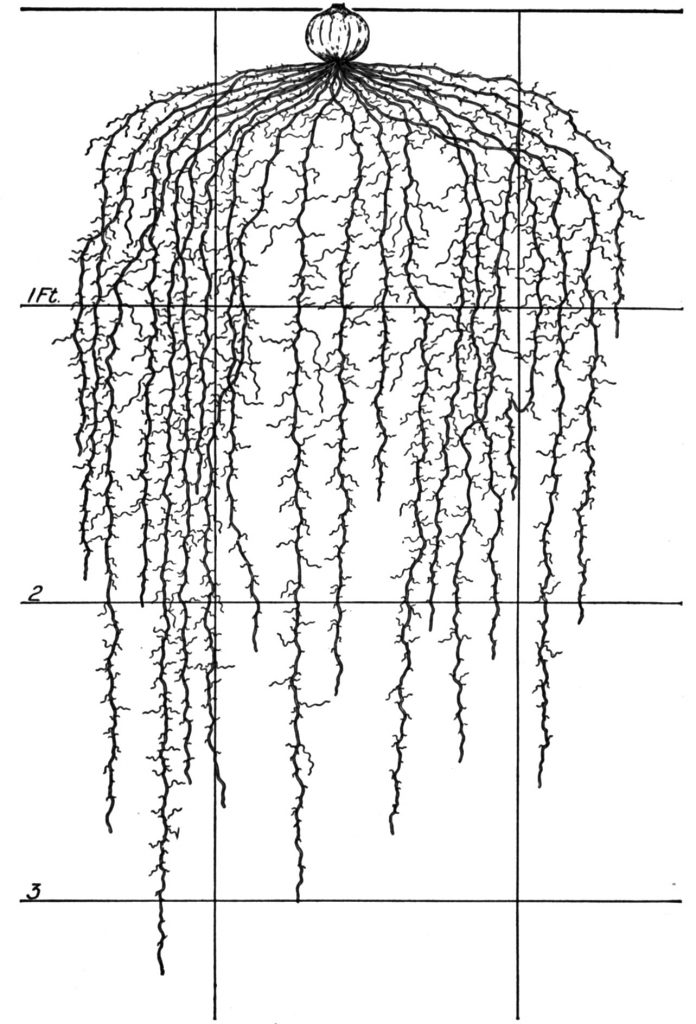

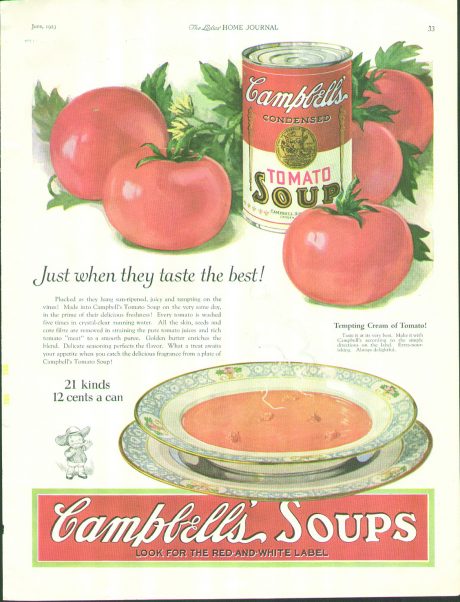

1500s: A Grand Exchange: Following the European encounters with the New World at the beginning of the sixteenth century, the environmental, botanical, and agricultural landscapes of the continents each become irrevocably altered by knowledge of the other. Alfred Crosby’s description of this “Columbian Exchange” narrates the widespread transmission of animals, plants, cultural practices, human populations, diseases, technologies, and ideas between the American and Afro-Eurasian hemispheres. In the course of these encounters Europeans witnessed the first arrival of New World plants like maize, tomatoes, potatoes, vanilla, cacao, and tobacco, even as they brought familiar (and unfamiliar) species of the Old World (apples, onions, and wheat, but also coffee, citrus, bananas, and rice) to the fertile shores they had found. The impact of exchange over the course of the century would reshape not only culinary identities, but the very form of the world surrounding them.

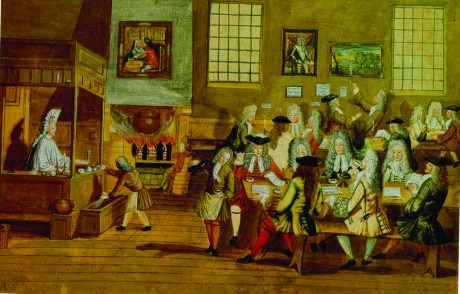

1650s-1680s: A Taste for Luxury: The first coffeehouses spread from the Muslim world (through commerce with the Ottoman Empire) to major centers of European and Neo-European trade, cities like Venice, Paris, London, and Boston. These coffeehouses become sites of social interaction and intellectual exchange. Around the same time, tea is popularized in England through the marriage of Charles II to the Portuguese princess Catherine of Braganza. Commerce with New World colonies provided increasingly reliable reserves of the plants of discovery, while the constant search for profitable quantities of exotic consumables like coffee, tea, sugar (and products derived from it, like rum), and spices laid the groundwork for an unprecedented scale of world trade.

“Interior of a London Coffee House,” 1668, Pim Reinders, Thera Wijsenbeek et al., Koffie in Nederland.

1730s: Viscount Charles “Turnip” Townshend introduces English farmers to the “new agriculture” (the four-course crop rotation system) that he had observed in use in Flanders. His arguments for agricultural reform are met with amusement, but prove influential in the future development of the British food supply.

1747: The Scottish surgeon James Lind discovers that citrus provides protection against the scurvy afflicting sailors on long voyages at sea. His recommendation of a citrus ration is eventually adopted by the British Royal Navy, which begins issuing lemon juice in 1795.

1750s: The French colony of Saint-Domingue (in present-day Haiti) becomes the largest source of sugar in the world. Demand for sugar fuels an equally powerful demand for labor to cultivate the cane: over the course of the century nearly one million enslaved people are brought to work on the colony’s sugar plantations.

1798: The reverend and political economist Thomas Malthus predicts that the rise in living standards over the past century will come undone as population growth begins to outstrip national capacities for food production. His prediction of a future “Malthusian catastrophe” (a dramatic collapse forcing a return to subsistence) casts a long shadow over the continued growth of the human population.

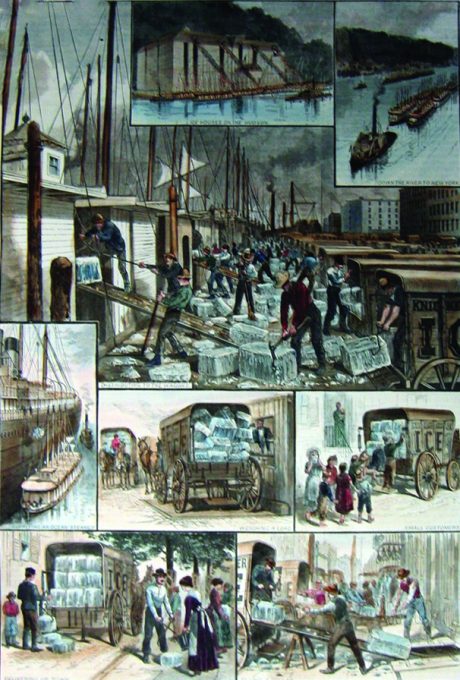

1799: The first significant commercial shipment of ice in the United States leaves New York City’s Canal Street for customers in Charleston, South Carolina.

1803: The Cold Chain: While the application of heat is inexorably tied to the ancient evolution of consumption, the earliest reaches of civilization were equally invested in methods for its removal. If “catching fire” is tied to the movements of early man, then “keeping cold” seems a hallmark of grand civilization. The Chinese, Hebrews, Greeks, and Romans all stored snow and ice under insulation for use in cooling drinks and medicines. In southern Europe the chill of night could produce refreshment for the hottest part of the morning, but it was not until the seventeenth century that the French began using saltpeter and long-necked bottles to produce effects contrary to their climate. Frozen juices and icy liquors became popular, if ephemeral, delicacies, constrained only by the infrastructure available to transport them. But while traditional methods of preservation (salting, spicing, smoking, pickling, and drying) remained predominant before the 1830s, the trade in ice grew considerably. Maryland farmer Thomas Moore first introduced the term refrigerator in an 1803 patent to describe his insulated, ice-cooled box, and while consumer refrigeration would not become mainstream until the middle of the twentieth century, techniques of cooling soon began to infiltrate the meatpacking and brewing industries. By 1914 nearly all American plants in these industries made use of mechanical refrigeration (often ammonia compression) as part of their trade.

1809: Tin Can Archaeologies: While the movement of man had always been limited by the stability of his sustenance, and though the furnishing of feed and fodder had universal implications, on the field of battle it often dictated the fortunes of war. Bound by the umbilical cord of supply, armies depended on provisions sourced long in advance or ploddingly foraged by local scouts. When the turn of the nineteenth century found Europe engulfed in a calamity born of revolutionary fervor, the pace of Napoleonic expansion left little room for lengthy negotiations. To maintain the economy in the face of trade blockades and embargoes, prizes were offered for exceptional innovations. In hopes of one, the confectioner Nicolas Appert developed a means of keeping foods fresh without relying on foraging. His technique, sealing food within glass bottles and applying heat, was not only a method for preserving meats, vegetables, fruits, and milk, but a mystery that remained largely unexplained until pasteurization. As Appert collected his 12,000 franc prize in 1809, across the Channel the British merchant Peter Durand was packing the approach into a more familiar form. Stocked with boiled meats and soups sealed in his tin-coated iron canisters, the Royal Navy set sail with the future fuel of expansion, conflict, and colonization, resting on steady supply from, and across, the whole of the earth.

1810: Service à la russe enchants Parisians with the formality of courses served in continuous succession. Credited to the Russian ambassador Alexander Kurakin, it is recognizable as the style of service still seen in contemporary Western cuisine.

1813: Bryan Donkin and John Hall set up the first commercial canning factory in England based on Durand’s model, its iron canisters offering fewer risks than glass during transportation and storage at sea.

1810s-1820s: Canned goods become staples of consumption for Britain’s distant armies and agents, expanding the reach (and success) of exploration and colonization. Admiral Parry takes canned meats on his expeditions to the Arctic. The instructions on the cans direct “cutting round on the top near to the outer edge with a chisel and hammer.”

1819: Commercial canning expands in America in the persons of William Underwood in Boston (primarily fruit) and Thomas Kensett in New York (mostly seafood). Initially using glass containers, they switch to “tin” cans at the beginning of the 1840s.

1826: The Reverend Patrick Bell invents the mechanical reaping machine in Scotland. Machines like the corn reaper (introduced by Cyrus McCormick to Virginia in 1831) and the combine harvester (Hiram Moore in 1835) radically reduce the human labor necessary for agricultural production, allowing large populations to depend on the efforts of a small fraction of farmers.

1830s-1840s: The German chemist Justus von Liebig conducts research into plant chemistry and its relationship to agriculture, work for which he becomes known to history as the “father of fertilizer.”

1840s-1860s: Can making, initially done by hand, sees limited mechanization alongside bottling operations at the start of the American Civil War, and annual output reaches nearly thirty million cans by the conflict’s close. Canisters used as wartime provisions tend to be drab and utilitarian, but consumer goods become marked by eye-catching lithographs printed directly on the tin surface.

1864: The experiments of the French chemist Louis Pasteur explain the principles behind the rapid application of heat in preservation and its effect in limiting the growth of the microorganisms responsible for spoilage. Around the turn of the century, can production shifts from the hole-and-cap design to the sanitary (open-top) model, so called because the solder is confined to the outside of the container.

1867: J.B. Sutherland’s patent for the refrigerated railroad car (a concept demonstrated as early as the 1840s) helps establish long-distance transport of perishable goods. Refrigerated rail produces regional specialization in produce and meat, even as it disseminates fresh foods like eggs, butter, milk, and cheese throughout expanding (and increasingly distant) urban markets.

1871: Crimes Against Butter: When the New York-based United States Dairy Company began producing colored oleomargarine as a kind of “artificial butter” in 1871, dairy producers took their concerns to Congress. But the response, an excise tax and the requirement that margarine producers be licensed, was not enough: by 1902 over thirty states had passed outright bans on the sale of colored oleomargarine, and scores of violators had seen the inside of federal penitentiaries for unauthorized production. While the Oleomargarine Act of 1886 would be reversed more than sixty years later, in 1950, its passage demonstrated the full power of the government to regulate and control the production of food.

1876: The red triangle of the Bass brewery becomes the first trademark registered under the Trade Marks Registration Act. Within fifty years such marks adorn the world’s most recognizable articles of consumption.

1877: Charles Tellier uses the refrigerated ship Le Frigorifique to transport meat between Buenos Aires and France as Europe begins to rely on frozen meats imported from the substantial surpluses in its colonial strongholds. By 1910 over half a million tons of frozen meat are brought to Britain alone.

1878: Saccharin is discovered by chemist Constantin Fahlberg when he notices a sweet taste on his hand one evening after his work on coal tar derivatives. While the substance is commercialized within only a few years, it is not until World War I (and the sugar shortages accompanying it) that saccharin becomes widely available.

1879: There are now 35 commercial ice plants in America. By 1909 the number would climb to 2000 plants producing over 15 million tons of ice.

1885: Colonel John Pemberton registers his coca wine nerve tonic, the non-alcoholic formulation of which would become recognizable throughout the world as Coca-Cola.

1891: The Madrid Agreement reserves the use of the word “champagne” for the sparkling wine produced in that region, an arrangement upheld in the Treaty of Versailles thirty years later. Countries throughout the world continue to secure legal protections for the provenance of food and wine as part of national and cultural identities, even as the global reach of production both elevates their stature and reworks the protectionist trades which produce them.

1894: J.H. Raymond uses the calorie (specifically the kilocalorie, or kcal), a unit of heat energy, in his discussion of human energy needs.

1897: Will Kellogg, a vegetarian and Seventh-day Adventist, promotes cereals like corn flakes as a healthy breakfast food at a time when the poor ate porridge and the rich ate eggs and meat. The Sanitas Food Company he founded with his brother John grew out of recipes developed for the Battle Creek Sanitarium; in 1906 Will formed the Battle Creek Toasted Corn Flake Company, the forerunner of the modern Kellogg Company.

1900s: The necessity of tin as a mechanism for sealing and soldering binds the fate of the canning industry to the sources of the metal—a fragile link during years of global crisis. The soft metal brought from Malaysia’s tin houses and Indonesia’s tin sands collects with it all manner of substances and substrates. Mechanized methods for tinning found such a co-constituent in the palm oil brought from Africa for Europe’s soap, candles, and textiles. Useful as a lubricant since the industrial revolution, it proved invaluable in the processes preserving the universal preserver, leaving the creation of canisters reliant on geographies and cultures as exotic as the luxury imports carried within them.

1900s: Americans are captivated by the healthy eating theories of Horace Fletcher, whose strictures arguing (among other things) that food should be chewed approximately thirty-two times before being swallowed earn him the nickname “The Great Masticator.” Despite the implausible reach of his claims, he managed to instill in his adherents a strong desire for knowledge of the composition of the food they consumed.

1901: Sixty independent companies within the previously diverse and decentralized canning industry merge into the massive conglomerate, American Can. Despite disruption, the canning industry will find unprecedented scales of demand in the aftermath of the First World War.

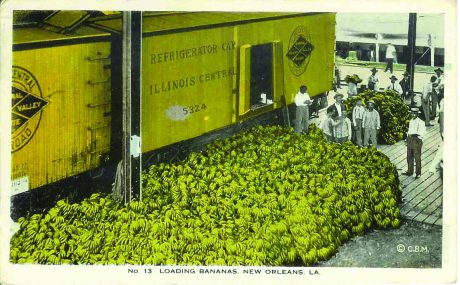

1902: Reefer ships are introduced by the United Fruit Company for the transport of bananas, with limited refrigeration to ensure that the profitable delicacy can make it to market before completely ripening.

1905: William Fletcher discovers that eating unpolished rice prevents beriberi (now recognized primarily as a deficiency of the vitamin thiamine), suggesting that something present in the outer husk helps fight the disease. Seven years later Casimir Funk, pursuing the substances Fletcher had pointed to, proposes that such micronutrients be named “vitamine.”

“‘Dropping hides’ and ‘splitting chucks’ at the Beef Department, Swift & Co.’s Packing House, Chicago,” 1906, H. C. White Company.

1906: Pigs’ Lips and Assholes: Strictures governing the consumption of food have existed nearly as long as humanity has been in the business of eating it. But outside the observances of tradition, both secular and religious, the history of legal regulation was largely confined to which kinds of people could possess which kinds of food, within the context of overriding issues of trade, privilege, and pure availability. Outside of blatant acts of fraud (the thirteenth-century Assize of Bread prohibited English bakers from mixing peas and beans into bread dough, for example), a sausage, once assembled, was like any other sausage. When Upton Sinclair’s publication of The Jungle in 1906 brought not, as he had hoped, empathy with the plight of the immigrant worker, but public furor over the unsanitary conditions of food production, it opened a century of constant investigation into the composition of food. Bearing out Richard White’s assertion that the most interesting of the pathways between humans and their industrialized landscapes are those that lead back to the body, health (and, as the hygienists had argued half a century before, cleanliness) became a unifying barometer of the symptoms of modernity, one that demanded an awareness of ingredients, quantity, and kind. The first regulations tended to be piecemeal, limited to issues that had provoked consumer outrage, and the food labels introduced in the 1940s (following the 1938 Food, Drug, and Cosmetic Act) remained limited to calorie and sodium content on foods intended for “specialized” dietary uses. But as the reach of labels expanded in the name of consumer rights and information, citizen-consumers soon found themselves at the center of a continuing struggle over who would have the authority to offer information, even as they found that they would be the ones made responsible for managing it.

1906: Coinciding with the Food and Drugs Act’s prohibition of interstate commerce in adulterated foods, public outrage prompts passage of the Meat Inspection Act, aimed specifically at the meatpacking industry.

1910: German chemist Adolph Windaus uncovers the first evidence that the plaque found in human aortas contains significant concentrations of cholesterol.

1912: The inventor Otto Frederick Rohwedder begins work on a machine to slice bread. While initially reluctant, bakers are convinced by his 1928 development of a machine to both slice and wrap, as a history of artisanal production reaching back to the beginnings of agriculture gives way to industrialization.

1912: Frederick Hopkins’s experiments with rats suggest that some foods, such as milk, contain “accessory food factors,” of which minute quantities are necessary for normal growth. Further research conducted by Lafayette Mendel and Thomas Osborne, and by Elmer McCollum and Marguerite Davis, suggests the presence of two such factors, which McCollum labels “fat-soluble A” and “water-soluble B.”

1912: Grocers in the Country Village, Grocers in the Great Town: Between the period when individual commerce in food became necessary and the early twentieth century, the practice of purchasing underwent only incremental change. In rural life customers might negotiate directly with the farmer, but in the city the ritual of cooking was preceded by visits to specialists offering specific consumer commodities. Meat from the butcher and bread from the baker meant a proliferation of small shops, each with limited inventories and high costs, governed by haphazard management and fed by a chaotic assortment of jobbers and middlemen. The Great Atlantic & Pacific Tea Company (A&P) had begun as a mail-order tea business in the middle of the nineteenth century. When it moved to systematic grocery distribution in 1912, its “economy store” brought standardization, and scale, through vertical integration over a food distribution chain reaching to the trucks, warehouses, and factories. This leverage allowed it to introduce modern accounting practices and scientific management to a stagnant industry, moving from individual enterprises based on credit and custom to national chains fed by anonymous cash-and-carry.

1913: The Lipid Hypothesis: Long before the contents of consumption could be carefully constructed and catalogued, outlined in labels, and debated in the annals of medicine, popular magazines, and daytime television, the mechanisms of digestion had been open to rumination. Ancient practitioners had some understanding: Galen saw the stomach as an animate agent of the body, one which could feel emptiness and stimulate consumption. It was a storehouse and the site of first digestion, where the proverbial wheat was separated from the chaff. But Galen gave the most emphasis to the liver, which he saw as the origin of the veins and the site where blood was formed. It was here that digested food was transformed into the sluggish ooze that spread through the veins to carry nourishment to the body. Over the early decades of the twentieth century, the chemical isolation of vitamins and nutrients began to emphasize the fragile connections between nutrition and human health. But when Nikolay Anichkov produced atherosclerosis in rabbits by feeding them cholesterol in 1913, the first intuitions of the perils accompanying the power of the blood began to trickle into the work of scientists and doctors exploring the role of nutrition in human health. Initially dismissed as “not relevant” to human disease, the hypothesis found confirmation some seventy years later, transforming what had been a fundamental fact of life into a condition that could be both predicted and prevented.

1916: Piggly Wiggly opens in Memphis, Tennessee, as the first self-service grocery store.

1920s: Monoculture: By the early 1920s bananas had become the first exotic fruit commonly available to Americans, rivaled in numbers only by the apple. As with sugar and coffee before it, the construction of demand for the exotic import required the wholesale reconstruction of its distant ports of origin, as emergent multinationals like United Fruit almost completely reconfigured places like Honduras in Central America. Fearing that customers would only tolerate a particular kind of banana, and in search of a perfectly packable product, producers terraformed the region in favor of Gros Michel monocultures. The price was incalculable environmental change, a fruit susceptible to rapid outbreaks of disease, and an insatiable demand from the West.

1921: The refrigerator slowly becomes a household fixture, with over five thousand manufactured in the United States alone. With its mass production following the Second World War, some ninety percent of American homes would come to own one.

1922: The New York Times reports that Elmer McCollum and his team had “captured a hitherto unknown vitamin,” which had been labeled Vitamin D. The findings confirm the preventive and therapeutic value of cod-liver oil and sunlight for the condition of rickets in children.

1924: The Supreme Court expands the reach of the Food and Drug Act to prohibit any statement, device, or design intended to mislead or deceive. Similar expansions of statutes over the following decades continue to provide increasing standards for contents in particular (if arbitrary) products.

1928: Frigidaire patents chlorofluorocarbons (CFCs) as a new kind of synthetic refrigerant. Released to the market in 1930, they become wildly successful, even as their widespread use in consumer packaging will be found to have devastating environmental consequences in the decades to follow.

1929: Clarence Birdseye’s development of the double belt freezer leads to the introduction of the first line of frozen foods targeted to the general public (under the freshly formed Birds Eye Frozen Food Company). The offerings included meats, vegetables, fruits, fish fillets, and oysters. Retail acceptance is limited, with many stores reluctant to invest in cold food displays to market the products.

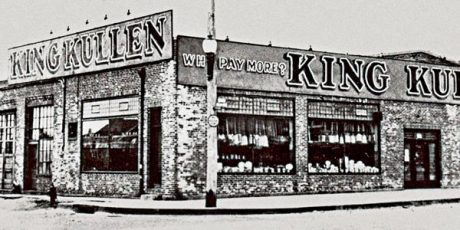

1930: The Center Store: As broadcast media continued to reach into the home (and, consequently, the kitchen), businesses found new opportunities to exploit the national consumer sensibility they had fostered. Michael Cullen, then an employee of the Kroger Company, developed a plan for a new kind of self-service store that could take advantage of the popularity of nationally branded goods, forming his own store, King Kullen, to see it through. But it wouldn’t be until after the Second World War that the format and the practices it had pioneered (customers serving themselves with little assistance, grocery carts to encourage bulk purchasing, and parking lots to leverage expanding automobile use) would find widespread acceptance. As the decades progressed, this “center store,” loaded with aisle after aisle of packaged national brands, grew to encompass a periphery stocked by more traditional purveyors: greengrocers, butchers, and bakers left behind their independent establishments to take their new place in the future of food retail.

1930s: The grapefruit or “Hollywood Diet,” which advocated eating half a grapefruit with every meal, becomes one of the first fad diets to receive widespread interest. It continues to experience popular resurgences, most notably in the 1970s.

1934: The Seven Seas company is formed by a group of British trawler owners to sell a by-product of their industry (namely cod-liver oil) to a public increasingly concerned with supplementary sources of the vitamins and nutrients isolated over the past two decades.

1937: The Hormel Food Company introduces SPAM, a name often said to derive from “shoulder pork ham.”

1938: The Food, Drug, and Cosmetic Act lays the foundation for information about food in the United States by authorizing standards to safeguard consumer value. Requiring that labels feature a food’s common or usual name, it provided that a food would be “misbranded” if it represented itself as a standardized food and then failed to conform to that standard, be it in quantity, quality, or kind.

1938-1939: Investigation into a genetic disorder (familial hypercholesterolemia) suggests further links between cholesterol, the level of atherosclerosis in patients, and coronary artery disease. Research continues to explore the mechanisms through which the body produces and maintains cholesterol, and the possibility that foods high in cholesterol could be responsible for increased risk of heart disease and related ailments.

1940s: Japan’s control of southeast Asia during the Second World War imposes severe restrictions on worldwide supplies of tin. Frozen foods, packaged in paperboard, cellophane, and wax paper, fill shelves left empty by dwindling reserves of canned goods. Following the end of the war, the introduction of frozen concentrated orange juice heralds an era of expanding interest in the trade of food commodities in an increasingly international market.

1941: Physiologist Ancel Keys is tasked with the design of a non-perishable ready-to-eat meal for the United States War Department in the Second World War. The result (dubbed the K-ration) is a densely caloric complement of foodstuffs that, while intended only as a short-term emergency assault ration, soon becomes a regular provision for soldiers entering combat.

1947: Raytheon produces the “Radarange,” the first commercially available microwave oven. It is nearly six feet tall, weighs over seven hundred pounds, and costs $5,000.

1950: Revision of the Oleomargarine Act inaugurates an era of increasingly widespread (and mandatory) labeling requirements, replacing a federal tax on the substance with the requirement that colored oleomargarine be prominently labeled to distinguish it from butter.

1951: John Gofman begins work on the theory that cholesterol-carrying molecules in the blood can serve as predictors of the risk of heart disease. In response, Helen Gofman and others at Berkeley publish what may be the first heart-healthy cookbook.

1952: The introduction of the TV dinner (Swanson & Sons sold over 5000) brings a complete meal, albeit one in frozen form, for families looking to dine quickly at the table or in front of the television. As items like fish sticks and frozen pies meet with consumer acceptance, companies rush to capitalize on the rapidly growing market.

1955: Comparative observational studies reveal a correlation between saturated fat intake and the rate of fatal heart attack in national populations the same year that Ray Kroc opens a franchise of one of the McDonald brothers’ popular restaurants. His buyout of the company in 1961 transforms the “simple hamburger joint” into one of the largest and most recognizable restaurant chains in the world.

1958: Cranberry Crisis: The Delaney Clause, named for James Delaney, then a Congressman from New York, amends the Food, Drug, and Cosmetic Act such that “the Secretary of the Food and Drug Administration shall not approve for use in food any chemical additive found to induce cancer in man, or, after tests, found to induce cancer in animals.” The clause was applied to pesticides and herbicides in processed foods the following year, when the public was advised that cranberries produced in Oregon and Washington may have been contaminated with the carcinogenic herbicide aminotriazole. The cranberry scare of 1959 saw Thanksgiving sales plummet, one of the first modern food scares prompted by the presence of a chemical additive.

1960: The rise of home refrigeration and expanding access to supermarkets begins to have serious consequences for the milkman. By the early 1960s home delivery of milk had fallen into a slow decline from which it would never recover.

1961: Theories that a diet low in saturated fat could limit the risk of heart disease take hold within the medical community, with the American Heart Association cautiously suggesting that patients who had experienced heart attacks or strokes could reduce their risk of recurrence by limiting saturated fat. By the middle of the decade the guidelines are extended to the general public, even as outspoken cardiologists continue to argue that evidence for cholesterol reduction as protection against heart disease remains insufficient.

1961: The Codex Alimentarius Commission (from the Latin for “Book of Food”) is established by the Food and Agriculture Organization of the United Nations (and, two years later, jointly with the World Health Organization), a global attempt to organize international standards, guidelines, and recommendations relating to foods, food production, and food safety.

1962: Sam Walton, a former J. C. Penney employee, leverages his success with the low-price, high-volume sales model into the launch of the first “Walmart Discount City” store. The company will grow from a regional chain into one of the largest corporations in the world, the largest private employer in the country, and one of the most significant forces in food retail.

1962: The search for accessible, consumer-friendly beverage containers culminates in the introduction of the pull tab. Until it was displaced by non-detachable easy-open tops, the tab was among the most emblematic and ubiquitous artifacts of contemporary consumer culture, and by weight and count it remained the most significant piece of litter until the end of the 1980s.

1962: The demands of citizens transform into the rights of consumers, as the rhetoric of what becomes known as the Consumer Bill of Rights enshrines the rights “to safety, to be informed, to choose, and to be heard.”

1962: Silent Spring: Questions about the environmental impact of contemporary agriculture surface when Rachel Carson’s 1962 publication of Silent Spring draws attention to the widespread use of DDT (dichlorodiphenyltrichloroethane) as a pesticide. Within a decade the compound is banned in the United States. It is not the last environmental crisis attached to public perceptions of the increasingly far-flung demands of food production, but it motivates the introduction of more stringent regulations monitoring agricultural point-source waste release, the use of pesticides and herbicides in farming, and the broader implications of industrial (often synthetic) chemicals.

1963: A rash of new diet crazes grips America amid the introduction of programs like Weight Watchers (as the informal meetings held by Jean Nidetch became known). These programs bring with them both the promise of community support and an emphasis on careful accounting.

1965: A rudimentary seed bank is installed by Kew’s Living Collections Division. Modern banks have practical uses (in this case, supporting the exchange of material between botanic gardens). Over time, however, they come to be seen primarily as vital genetic “backups” for plants that are no longer widely grown in industrial agriculture, and as insurance against widespread catastrophe.

1968: Paul Ehrlich’s The Population Bomb resurrects the specter of Malthusian calamity, warning of mass starvation and social upheaval in the decades to follow. The alarming account reignites fears about the growth of the human population, even as those fears meet new concerns about responsible stewardship of the planet’s future.

1969: Coca-Cola commissions an environmental study to determine the impact of individual container materials. The results prompt the implementation of recycling infrastructure (devolved to local authorities), allowing the company to realize a 90% reduction in the energy used over the can’s lifetime. The study is regarded as foundational in the history of life-cycle assessment.

1970s-1980s: Market saturation and recession continue to force innovation in food retail, with experimental formats like price clubs (Costco) and limited-assortment superettes (Aldi and Save-A-Lot) gaining popularity. At the same time, companies increasingly target natural food markets (Whole Foods Market) and specialized offerings (Trader Joe’s). It will take decades, however, before these formats achieve national prominence.

1971: Food producers and retailers form the Ad Hoc Committee on a Uniform Grocery Product Code to develop standards in support of a single checkout system. Their work will lead to recommendations for the UPC (Universal Product Code) in 1973.

1973: Soylent Green captures fears of a dystopian future suffering from overpopulation and resource depletion. Popcorn-eating cinemagoers find themselves captivated by the (probably) fantastical story of a population forced to survive on mysterious food rations.

1974: Counting Codes: The first bar code scanner is installed in a Marsh Supermarket in Troy, Ohio, ringing up a pack of Wrigley’s Juicy Fruit chewing gum as its first item. Within a decade scanners are installed in half of all food retail outlets, and within two decades in nearly all. Although ostensibly a labor-saving device, scanning gives retailers the ability to track purchases and inventory automatically. This treasure trove of information brings retailers power over producers. It fuels increases in turnover and stock diversity, as well as a plethora of retail opportunities wrought by test marketing, consumer panels, and detailed analytics.

1976: Akira Endo reports a fungal compound (later named mevastatin) that blocks the synthesis of cholesterol. The discovery leads to the development of statins, a class of drugs designed to reduce the concentration of the molecule in the human body.

1976-1980: Falling prices of microwave ovens deliver a new mainstay to the consumer kitchen. Coupled with the popularity of frozen foods, microwave meals become a staple of “convenient cuisine.”

1977: Controversy over the popular artificial sweetener saccharin prompts the FDA, which had already removed it from its GRAS (“generally recognized as safe”) list, to propose a ban. Industry is still reeling from the ban of the sweetener cyclamate eight years prior, and it averts an all-out ban by pushing the Saccharin Study and Labeling Act through Congress. The act delays any ban and requires a warning label acknowledging possible carcinogenic effects. This public confession strengthens future precedents for self-regulation.

1980: Food Networks: Electronic data interchange (EDI) had been pioneered in the late 1960s by the transportation industry. There, an overabundance of paperwork was the cost of doing business, and the industry found in standard formats for exchanging documents the potential to radically simplify the pathways of exchange. Nowhere was this potential more obvious than in retail. Several factors produced a never-ending stream of paperwork moving between hundreds of individual companies: high turnover in both quantity and kind, the need to track merchandise, negotiate orders, and manage inventory with individual suppliers, and the perishability of the merchandise itself. By the early 1970s retailers had already sought out piecemeal solutions to the problem. Yet the complex coordination required by the distribution network demanded a more organized approach. In 1980 several industry groups retained Arthur D. Little to consult on implementations of EDI in the grocery industry. These were the Cooperative Food Distributors of America, the Food Marketing Institute, the Grocery Manufacturers of America, the National-American Wholesale Grocers’ Association, the National Association of Retail Grocers, and the National Food Brokers Association. The resulting feasibility report offered concrete recommendations (and necessary actions) for a unified approach to data interchange. These recommendations established the foundational mechanisms that organize the supply and distribution networks of the modern chain grocery.

1980s: In the face of mounting health concerns, the USDA’s “Dietary Guidelines for Americans” (first issued in 1980) bring government oversight to individual consumptive choice in the name of “public health.”

1981: Lean Cuisine is introduced as a healthier alternative to Stouffer’s popular line of frozen meals. Initially offering only ten meal selections, the line eventually grows to more than one hundred.

1981: Of the Flesh of Others: Diets that limit the consumption of animal flesh, and sometimes of other animal products as well, had long been woven into cultural and religious traditions throughout the world. Until recently, however, they had remained firmly intertwined with those legacies. Outside them, such diets were regarded as personal eccentricities or offered only as the most extreme of medical recommendations. Their increasing popularity coincides with a broader shift in public attitudes toward the treatment of animals. That shift is advocated (sometimes violently) by the animal rights groups (such as PETA) that burst into the public sphere over the course of the 1970s and 1980s.

1982: Monsanto scientists become the first to genetically modify a plant cell. Only five years later, the company conducts the first field tests of genetically engineered crops. Within fifteen years it will have become the largest seed purveyor in the world. Meanwhile, questions about the long-term viability of agricultural monocultures evolve into scrutiny of the implications of genetically engineered foods.

1984: The lipid hypothesis is confirmed by National Institutes of Health trials. These trials underline the causal link between blood cholesterol and cardiovascular disease and lead to recommendations that lowering cholesterol be treated as a national therapeutic goal.

1986: Fear of mad cow disease (bovine spongiform encephalopathy) takes hold following an outbreak in the United Kingdom. There are suggestions that the disease has ancient origins: Hippocrates described a disease afflicting sheep and cattle, as did the Romans. The crisis leads countries throughout Europe and the world to ban the import of British beef for ten or even twenty years. Meanwhile, small numbers of cases continue to surface across the continent and in Canada, the United States, and Japan.

1990: The Cradle to the Grave: The term “life cycle assessment” (LCA) is coined in 1990 at the first international workshop sponsored by SETAC (the Society of Environmental Toxicology and Chemistry). Unlike the point-source regulation that had preceded it, LCA aims to avoid shifting a product’s environmental burden to other life cycle stages or to other parts of the product system. It offers a seemingly all-encompassing model to catalogue and quantify the myriad impacts of production across every dimension of a product’s life.

1990: The Nutrition Labeling and Education Act requires a standardized nutrition label on nearly all packaged foods, giving consumers the information to take responsibility for nutrition decisions. As a concession to manufacturers, the FDA permits some claims (such as “light” or “low fat”) to appear on packaging so long as they match agreed definitions.

1994: Angela Paxton publishes “The Food Miles Report: The Dangers of Long-Distance Food Transport,” based on the concept developed by Tim Lang. Although food miles are not a systematic form of life-cycle assessment, the measure becomes a consumer-friendly index for representing the global reach of food production.

2000s: Industry labels outside the prescribed definitions of the NLEA (including, for a time, “organic”) become prominent in marketing materials and packaging in response to consumer interest. Government agencies, lobby groups, and industry cooperatives all jockey to become the authority that defines and markets these newly desirable facets of food production.

2009: Refrigerated container ships now carry the majority of seaborne cold cargo, holding nearly ninety percent of refrigerated shipping capacity.

2010s: Industry initiatives like nutrition keys, simple nutrition shelf tags, and manufacturer and retail seals of approval come to dominate packaging. These hybrid categorizations of food production, composition, and consumption seem sure to proliferate. Their meanings remain uncertain, even as they move to the forefront of consumptive choice and consumer concern.

Foods of the Future: The future of food opens only to our desires. Caloric concentrations surge to new abundance, as diversity and demand rest hand in hand. The threat of dystopian calamity cascades calmly through the contradictions of the time. The world starves in an age of plenty. Profound insights into the nature of consumption and human health buckle under an abundance of information. Gardens grow on city rooftops at scales made laughable by the preternatural provisions of the factory farm. Acres of carefully crafted wheat grow with impossible identicality under the watchful gaze of the ancient summer sun. Culinary trends rise and fall as fads of flavor and diet careen through every vehicle of mediation. Tiny cracks of disease and contamination threaten the fragile and frightening foundations of the webs of supply spinning beneath each product on the store shelf. Hermeneutic plastic packaging covers foods now equally ensealed within the perceptions and annotations of their origins: local, organic, heart-healthy, and ethically sourced. The nineteenth and twentieth centuries had been defined by an increasing distance in humanity’s long affair with food. The twenty-first sets out to close that distance, with food’s forms encased within new understandings of composition, production, and infrastructure.